
When deciding on potential gas limit increases, client developers have to think like an attacker. They need to identify the worst-case scenario an adversary could target and work out how it can be improved.

Because Ethereum Mainnet is rarely attacked, however, it is just as important to measure client improvements in the average case, under real mainnet load. Client teams usually do this by syncing two versions and checking for significant differences between them.

However, this approach is flawed. Sync times are inherently variable: clients sync to different heads, have different sets of peers, and so on. This lack of repeatability and the resulting high variance lead to misleading conclusions and can hide real performance improvements or regressions that matter in practice.

At Nethermind, we developed a new benchmarking framework that removes the randomness of live-network testing by replaying the exact same mainnet blocks for every client. Instead of relying on whatever the network may produce on any given day, we use a fixed set of historical blocks that remain unchanged.

Each run starts from the same mainnet snapshot, on the same hardware, with no 12-second gaps between blocks. This ensures that every client receives identical input data under identical execution conditions, enabling fair comparisons of results.

Nethermind’s new, open-source, client-agnostic framework enables testing on a real mainnet database, rather than an empty or artificial state. Given that clients can behave differently when running with a database full of real mainnet data, this framework reveals flaws and improvements that benchmarks running only on an empty state cannot properly capture.

It also introduces a faster feedback cycle: run → reset → improve → run again, always against the same workload. This makes it possible to iterate quickly on potential improvements.

Since the workload remains constant, the tests are fully repeatable. You can make a performance improvement today and measure its real impact tomorrow with confidence.

The benchmark supports two modes aimed at capturing different aspects of execution performance. Both run blocks back-to-back with no pauses, increasing the speed of execution and removing amortization effects. This setup also exposes how clients handle garbage collection and state writes without any “downtime,” processing blocks in a continuous stream.
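As a rough illustration of what "back-to-back with no pauses" means, here is a minimal Python sketch of a replay loop that times each block. It is not the framework's actual implementation (the post describes K6 as the replay tool). The `execute` callable and the payload dictionaries are hypothetical stand-ins for whatever submits a payload to the client and waits for it to finish processing.

```python
import time
from typing import Callable, Iterable, List, Tuple


def replay_back_to_back(
    payloads: Iterable[dict],
    execute: Callable[[dict], None],
) -> List[Tuple[int, float]]:
    """Submit payloads one after another and record per-block wall time.

    `execute` is a hypothetical, blocking function: it should return only
    once the client has finished processing the payload.
    """
    timings = []
    for payload in payloads:
        start = time.perf_counter()
        execute(payload)  # no sleep here: the next block follows immediately
        elapsed = time.perf_counter() - start
        timings.append((payload["number"], elapsed))
    return timings


if __name__ == "__main__":
    # Made-up payloads and a no-op executor, purely to show the call shape.
    fake_payloads = [{"number": n} for n in range(3)]
    for number, seconds in replay_back_to_back(fake_payloads, lambda p: None):
        print(number, f"{seconds:.6f}s")
```

Because there is no idle time between calls, any background work the client defers (such as garbage collection or flushing state writes) has to happen while the next block is already arriving, and so shows up in the measured timings.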

The first mode replays historic mainnet blocks exactly as they occurred onchain.

The second mode merges multiple consecutive mainnet blocks into a single, synthetic “super-block”. It preserves the exact transaction order from the original block range, but delivers the transactions to the client as one extremely large block. This makes it possible to stress-test execution clients under conditions far beyond today’s mainnet limits, simulating future scenarios with significantly higher block gas limits or the kinds of large batches commonly produced on Layer 2 rollups. By doing so, it helps reveal performance bottlenecks, edge cases, and potential scalability issues in a controlled and reproducible way.
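The core idea of merging, concatenating transactions from consecutive blocks while keeping their original order, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the framework's code: the `Block` type and `merge_blocks` function are hypothetical, and a real merged payload would also need consistent header fields that this sketch ignores.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Block:
    """Hypothetical, stripped-down block: just what the sketch needs."""
    number: int
    gas_used: int
    transactions: List[str]  # e.g. raw transactions as hex strings


def merge_blocks(blocks: Sequence[Block]) -> Block:
    """Merge consecutive blocks into one synthetic block, preserving tx order."""
    if not blocks:
        raise ValueError("need at least one block to merge")

    ordered = sorted(blocks, key=lambda b: b.number)
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.number != prev.number + 1:
            raise ValueError(f"blocks {prev.number} and {cur.number} are not consecutive")

    # Flatten block by block, so the original transaction order is kept.
    merged_txs = [tx for block in ordered for tx in block.transactions]

    return Block(
        number=ordered[0].number,  # arbitrary choice made for this sketch
        gas_used=sum(b.gas_used for b in ordered),  # nominal sum of the originals
        transactions=merged_txs,
    )


if __name__ == "__main__":
    merged = merge_blocks([
        Block(101, 20_000_000, ["0xcc"]),
        Block(100, 15_000_000, ["0xaa", "0xbb"]),
    ])
    print(merged.transactions, merged.gas_used)
```

The summed `gas_used` is only a nominal figure. Transactions that depend on block-level context may consume a different amount of gas once they execute inside a single merged block.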

Running the same workload under the same conditions makes performance differences clear. Below are the results for both test types: real mainnet payloads and merged payloads.

Block replay and load generation are handled with K6, with results visualized in Grafana. Nethermind used modest hardware: an OVH Advance-2 class machine with over 2 TB of snapshot storage and standard CPU/RAM, to align with EIP-7870 and home-staker viability.

Using modest hardware avoids skewed results and keeps the benchmark representative of solo and home Ethereum operators today.

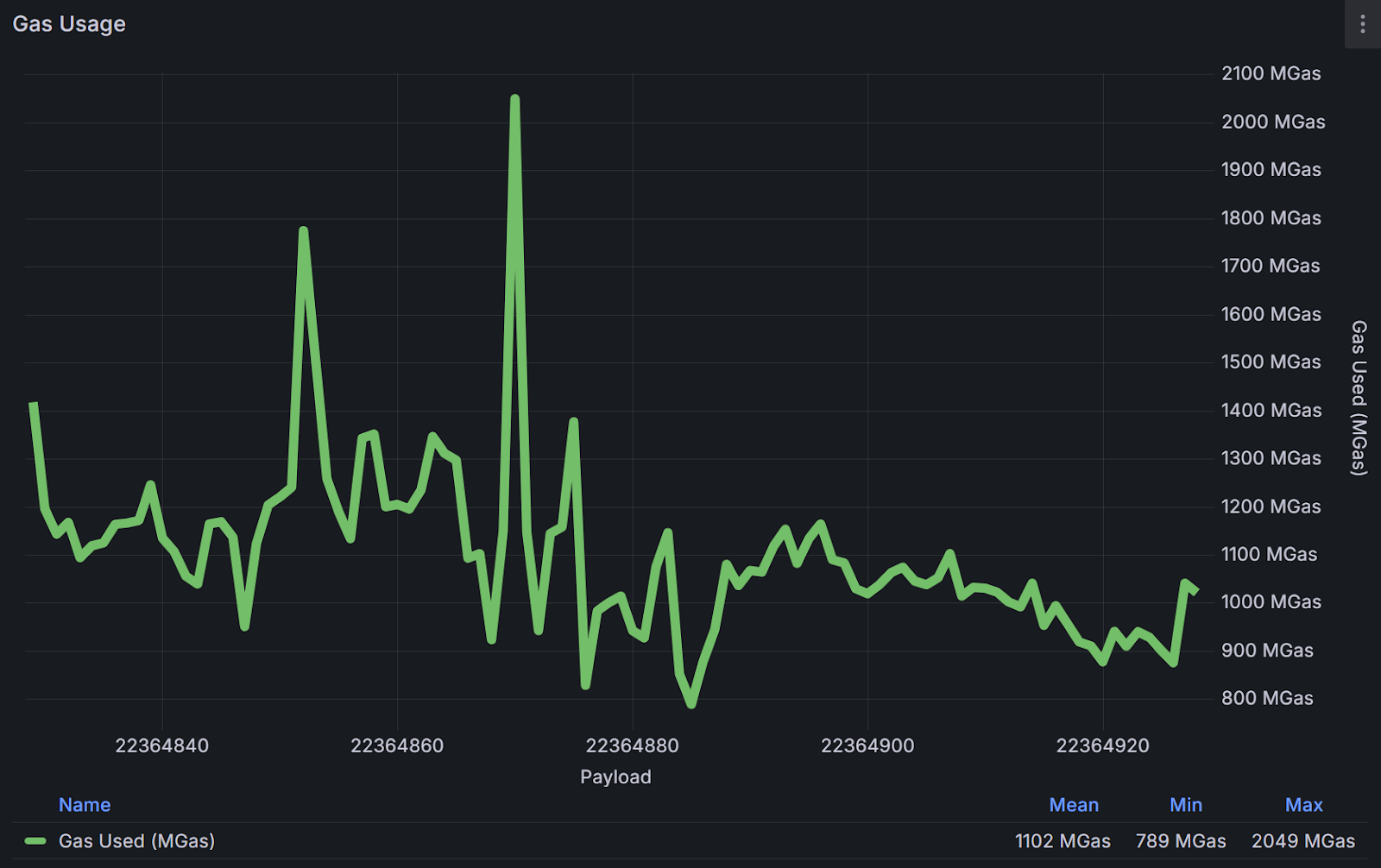

For the test, we selected blocks from the range 22,360,000 to 22,370,000.

Throughput (GGas/s) is used as the primary execution performance metric. It is calculated as the total gas consumed by a block, expressed in gigagas (GGas), divided by the block execution time in seconds:

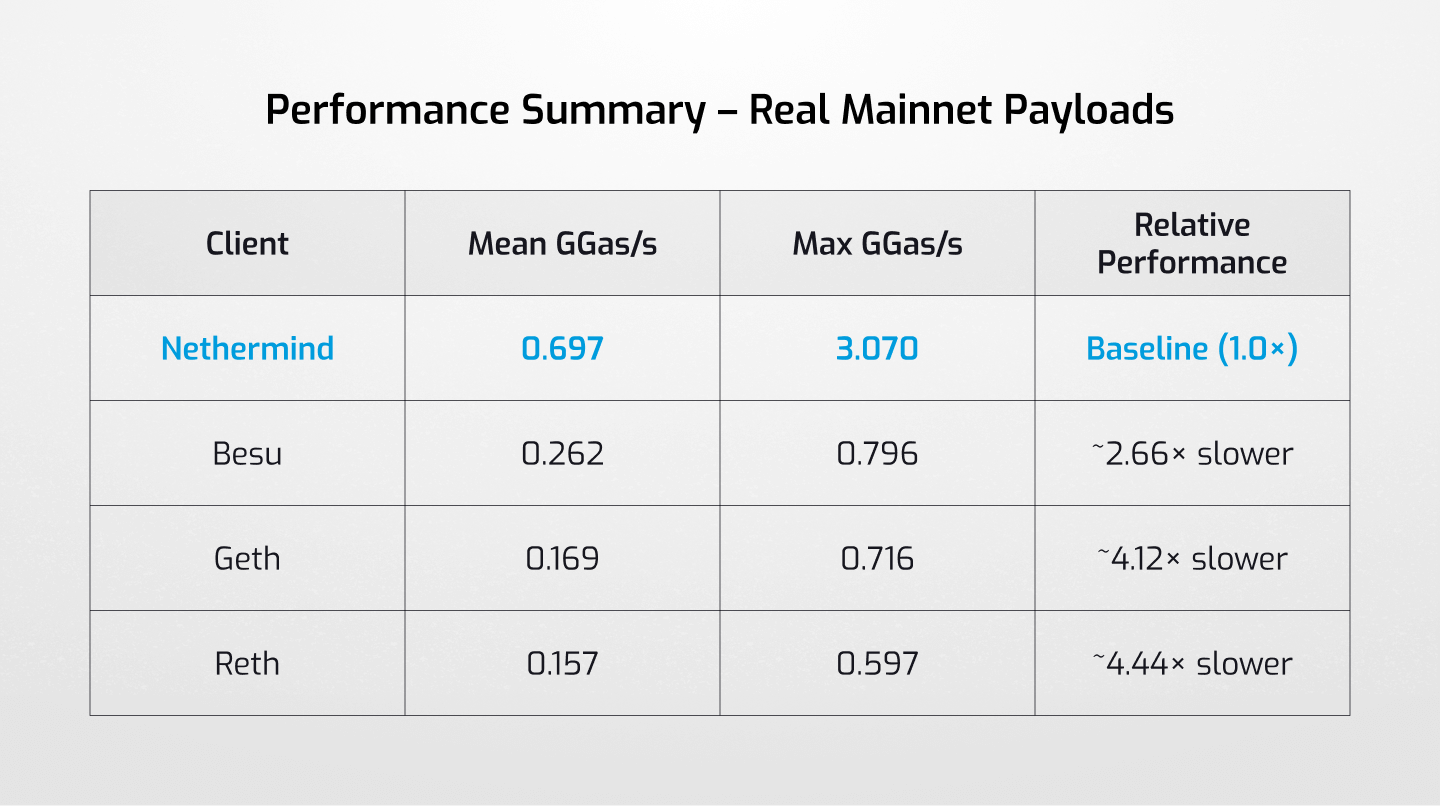
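As a worked example of the throughput definition, the following Python sketch computes GGas/s for a single block and converts it to MGas/s. The function names and the sample numbers are invented for illustration and are not measurements from the benchmark.

```python
GAS_PER_GGAS = 1_000_000_000  # 1 GGas = 10^9 gas


def throughput_ggas_per_s(gas_used: int, execution_seconds: float) -> float:
    """Throughput = block gas (in GGas) / block execution time (in seconds)."""
    if execution_seconds <= 0:
        raise ValueError("execution time must be positive")
    return gas_used / GAS_PER_GGAS / execution_seconds


def ggas_to_mgas(ggas_per_s: float) -> float:
    """Convert GGas/s to MGas/s (1 GGas = 1000 MGas)."""
    return ggas_per_s * 1000


if __name__ == "__main__":
    # Made-up example: a block using 36M gas that executes in 50 ms.
    t = throughput_ggas_per_s(36_000_000, 0.05)
    print(f"{t:.3f} GGas/s = {ggas_to_mgas(t):.0f} MGas/s")
```

How per-block values are aggregated into a single figure (for example, a mean over per-block throughput versus total gas divided by total time) affects the result, so the two should not be compared directly.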

Nethermind executes the real mainnet blocks at a mean of 697 MGas/s (roughly 0.7 GGas/s), which is significantly faster than other clients under identical replay conditions, and maintains stable throughput throughout the block range.

This reflects the focus we have placed on performance over the past several years.

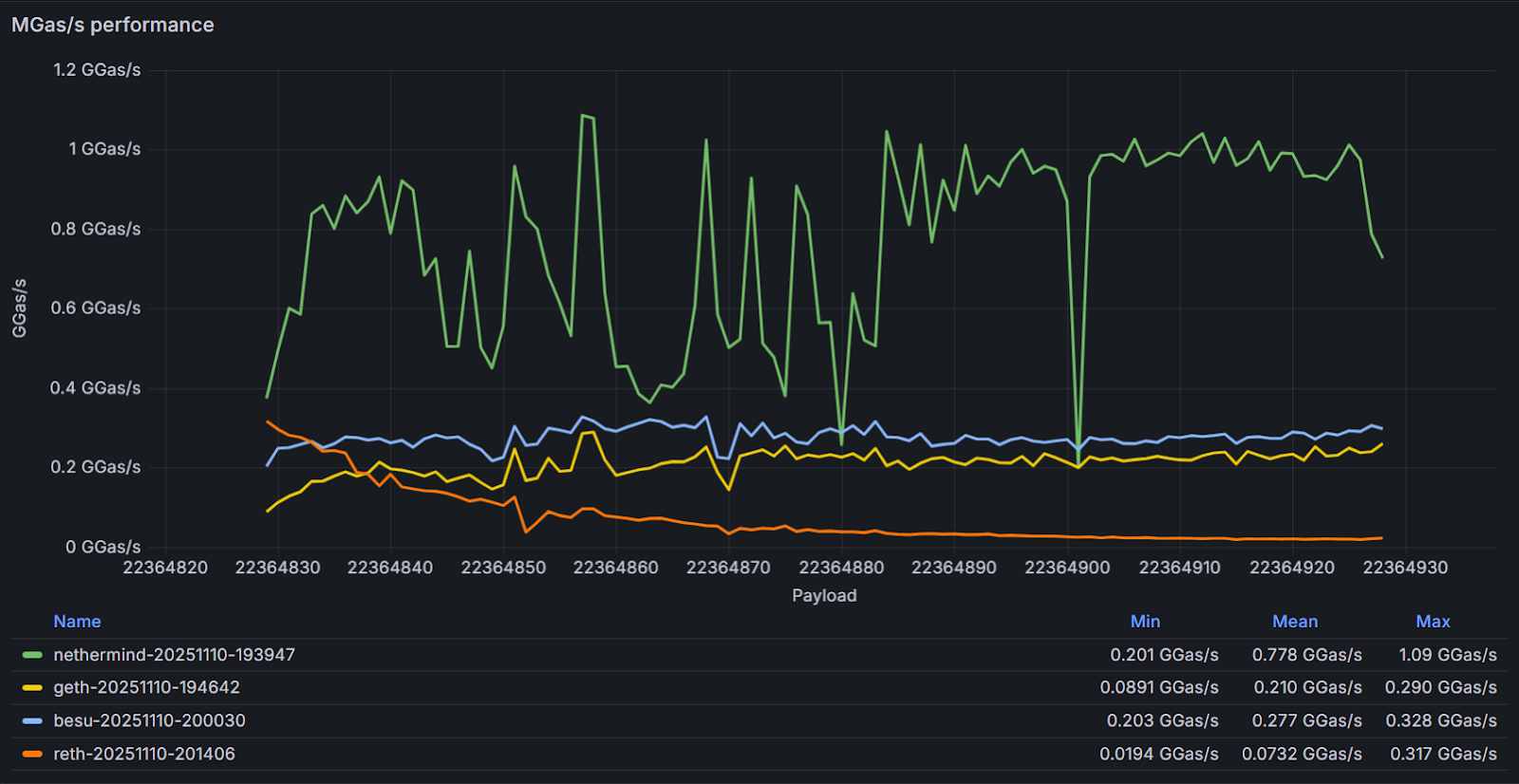

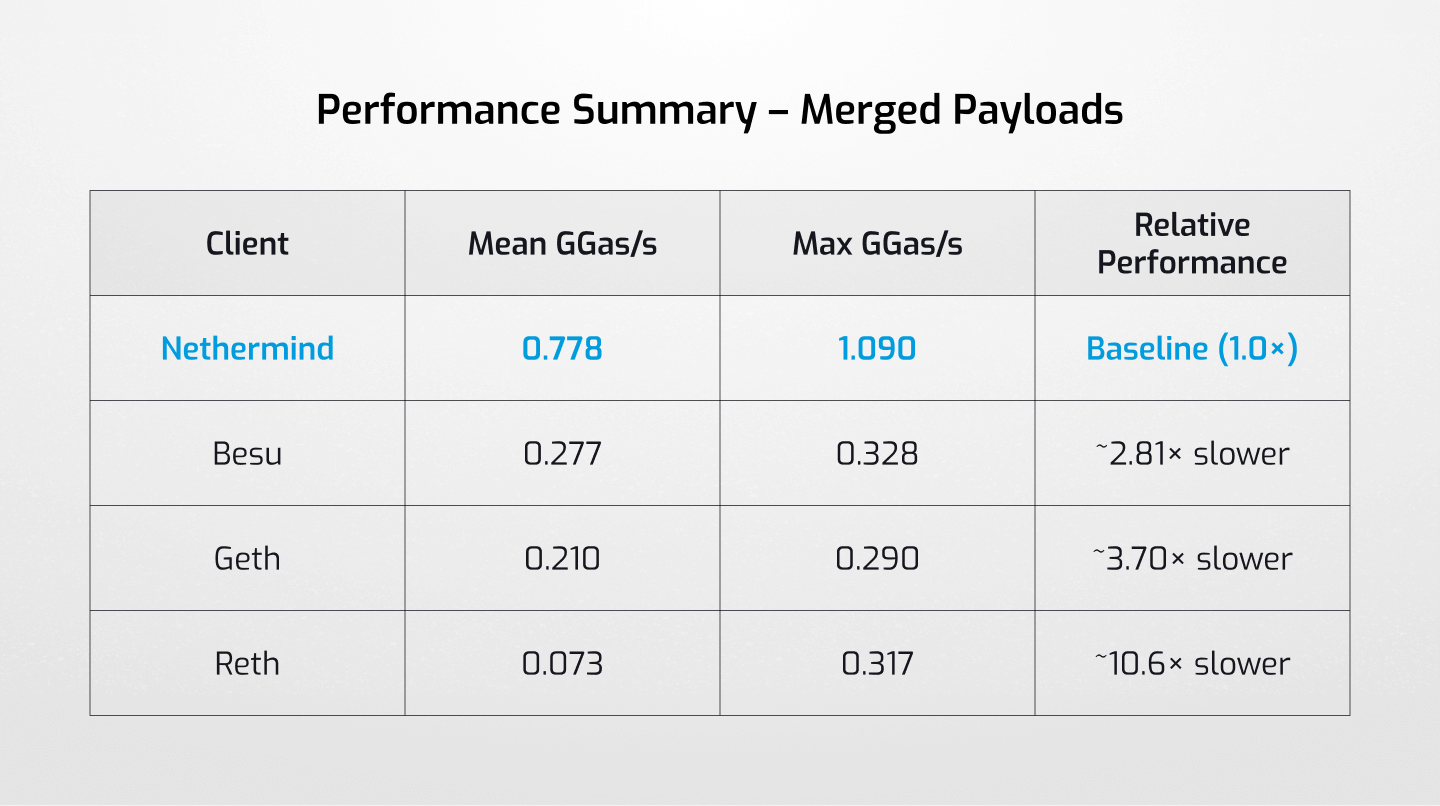

This test merges 100 consecutive real mainnet blocks into a single execution payload, producing merged blocks that average 1.1 GGas in size. It pushes clients far beyond typical mainnet block sizes and reveals deeper bottlenecks under extreme load.

Even when processing massive merged blocks, Nethermind continues to lead, with throughput between 2x and 10x that of the other clients. While Besu and Geth improved their average processing performance, Reth, interestingly, showed a 2x performance degradation compared to the non-merged tests. These results can point client teams toward adopting some of the improvements we have made in Nethermind.

EDIT: The issue has been reported to Reth and the root cause has been identified. Tests will be rerun once improvements to the tooling are implemented.