
Nethermind introduces Zero-Knowledge Watermarking (zkWM), a cryptographic framework that achieves both public verifiability and privacy for AI-generated content, eliminating a fundamental trade-off in existing watermarking approaches.

As LLMs generate increasingly sophisticated text, verifying content provenance becomes critical for ownership, attribution, and responsible use. Watermarking embeds algorithmically detectable patterns into generated outputs, but existing inference-time techniques face an inherent constraint.

Public statistical tests allow anyone to verify watermarks but expose the detection mechanism. This enables adversaries to remove watermarks through token manipulation or forge them on unauthorized text. Private verification keys solve the exposure problem but must be shared with verifiers, creating trust dependencies that don't scale.

No existing approach achieves both public verifiability and privacy protection simultaneously.

zkWM uses SNARKs (succinct non-interactive arguments of knowledge) to let model owners prove that a watermark is present or absent without revealing the internals of the watermarking procedure.

zkWM builds on established token-based watermarking, such as the approach by Kirchenbauer et al., where the LLM vocabulary is partitioned into a green list (tokens favored during generation) and a red list. Two parameters control watermark strength: γ sets the fraction of the vocabulary placed on the green list, and δ is the bias added to green-token logits, which controls how strongly those tokens are favored. This introduces statistically detectable patterns without significantly degrading text quality.
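To make the roles of γ and δ concrete, here is a minimal Python sketch of green-list watermarking in the style of Kirchenbauer et al. It is not the zkWM implementation: the function names, the secret `key`, the SHA-256 seeding by the previous token, and the flat-logit toy "model" are all assumptions made for illustration. Detection uses the standard one-proportion z-score, z = (hits − γT) / sqrt(T·γ·(1 − γ)), over T scored tokens.

```python
import hashlib
import math
import random


def green_list(prev_token: int, vocab_size: int, gamma: float, key: bytes) -> set[int]:
    """Pick gamma * vocab_size green tokens, seeded by a secret key and the previous token.

    The keyed SHA-256 seeding is an illustrative assumption, not zkWM's construction.
    """
    digest = hashlib.sha256(key + prev_token.to_bytes(4, "big")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    return set(rng.sample(range(vocab_size), int(gamma * vocab_size)))


def sample_watermarked(length: int, vocab_size: int, gamma: float,
                       delta: float, key: bytes, seed: int = 0) -> list[int]:
    """Toy generator: flat logits everywhere, so only the delta bias shapes sampling."""
    rng = random.Random(seed)
    tokens = [rng.randrange(vocab_size)]
    for _ in range(length):
        green = green_list(tokens[-1], vocab_size, gamma, key)
        # Adding delta to a logit multiplies the unnormalized probability by exp(delta).
        weights = [math.exp(delta) if t in green else 1.0 for t in range(vocab_size)]
        tokens.append(rng.choices(range(vocab_size), weights=weights)[0])
    return tokens


def z_score(tokens: list[int], vocab_size: int, gamma: float, key: bytes) -> float:
    """Count green-list hits and compare against the gamma * T expected by chance."""
    scored = len(tokens) - 1  # the first token has no predecessor to seed from
    hits = sum(
        tokens[i] in green_list(tokens[i - 1], vocab_size, gamma, key)
        for i in range(1, len(tokens))
    )
    return (hits - gamma * scored) / math.sqrt(scored * gamma * (1 - gamma))
```

With a strong bias (say δ = 4 and γ = 0.5), text from `sample_watermarked` scores many standard deviations above zero under the right key, while unbiased text, or the same text scored under a different key, stays near zero. Note that `z_score` needs the key, which is exactly the secret the zero-knowledge layer is meant to keep hidden from verifiers.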

The zero-knowledge layer adds cryptographic verification. For each candidate text, the prover generates succinct proofs confirming:

Verifiers check proof integrity without accessing the partition secrets or watermarking parameters, preventing both watermark removal and forgery.

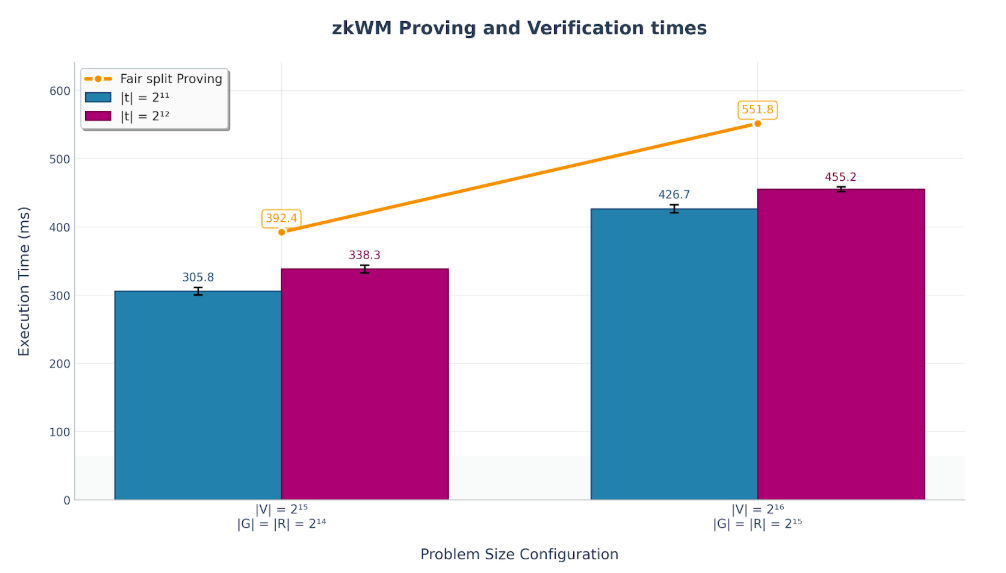

Benchmarks on a Ryzen 7 3700X with 64 GB of RAM show that zkWM handles production-scale scenarios efficiently.

End-to-end runs complete in 305-455 milliseconds for text lengths of 2048-4096 tokens (approximately 1500-3000 words) with vocabulary sizes matching production LLMs (32768-65536 tokens). These times include both proving and verification for all cryptographic proofs.

This sub-second performance contrasts with full inference proving approaches, which verify that specific inputs produce specific outputs but require substantially more computation time.

Fast verification unlocks applications that weren't previously feasible:

The prover-verifier model separates capabilities appropriately. Model owners maintain full access to watermarking parameters and generate proofs on demand. Verifiers receive only the candidate text, cryptographic proofs, and public commitments to the watermarking secrets. This is sufficient for verification but insufficient for manipulation.
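As a rough illustration of what a "public commitment" to a watermarking secret means, the sketch below uses a salted SHA-256 hash. This is an assumption for illustration only: the commitment scheme zkWM actually uses is described in the technical note, and the names `commit` and `opens_to` are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(secret: bytes) -> tuple[bytes, bytes]:
    """Return (commitment, nonce). Only the commitment is published."""
    nonce = secrets.token_bytes(32)  # random salt hides low-entropy secrets
    return hashlib.sha256(nonce + secret).digest(), nonce


def opens_to(commitment: bytes, secret: bytes, nonce: bytes) -> bool:
    """Check that (secret, nonce) is a valid opening of the commitment."""
    expected = hashlib.sha256(nonce + secret).digest()
    return hmac.compare_digest(commitment, expected)


# The model owner commits once and keeps the secret and nonce private.
commitment, nonce = commit(b"green-list partition key")
```

In a zero-knowledge setting the prover would never reveal `secret` or `nonce`. Instead, the proof would show that the detection result was computed with some secret that opens the published commitment, which binds the owner to one set of watermarking parameters across all proofs.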

This architecture means verifiers don't need to trust the prover's honesty about watermark detection. The zero-knowledge proofs provide cryptographic assurance that claimed watermarks exist (or don't) without exposing information that could enable attacks.

Read the complete technical specification: the technical note details the cryptographic construction and benchmark methodology, and extends the foundational watermarking research of Kirchenbauer et al. to achieve verifiability, privacy, and robustness.