
AI has materially altered the Web3 threat model by lowering the technical barrier to entry. High-impact exploits once required deep, specialized knowledge of smart contract architecture. Today, capable AI models allow actors with strong intent but limited expertise to close that gap.

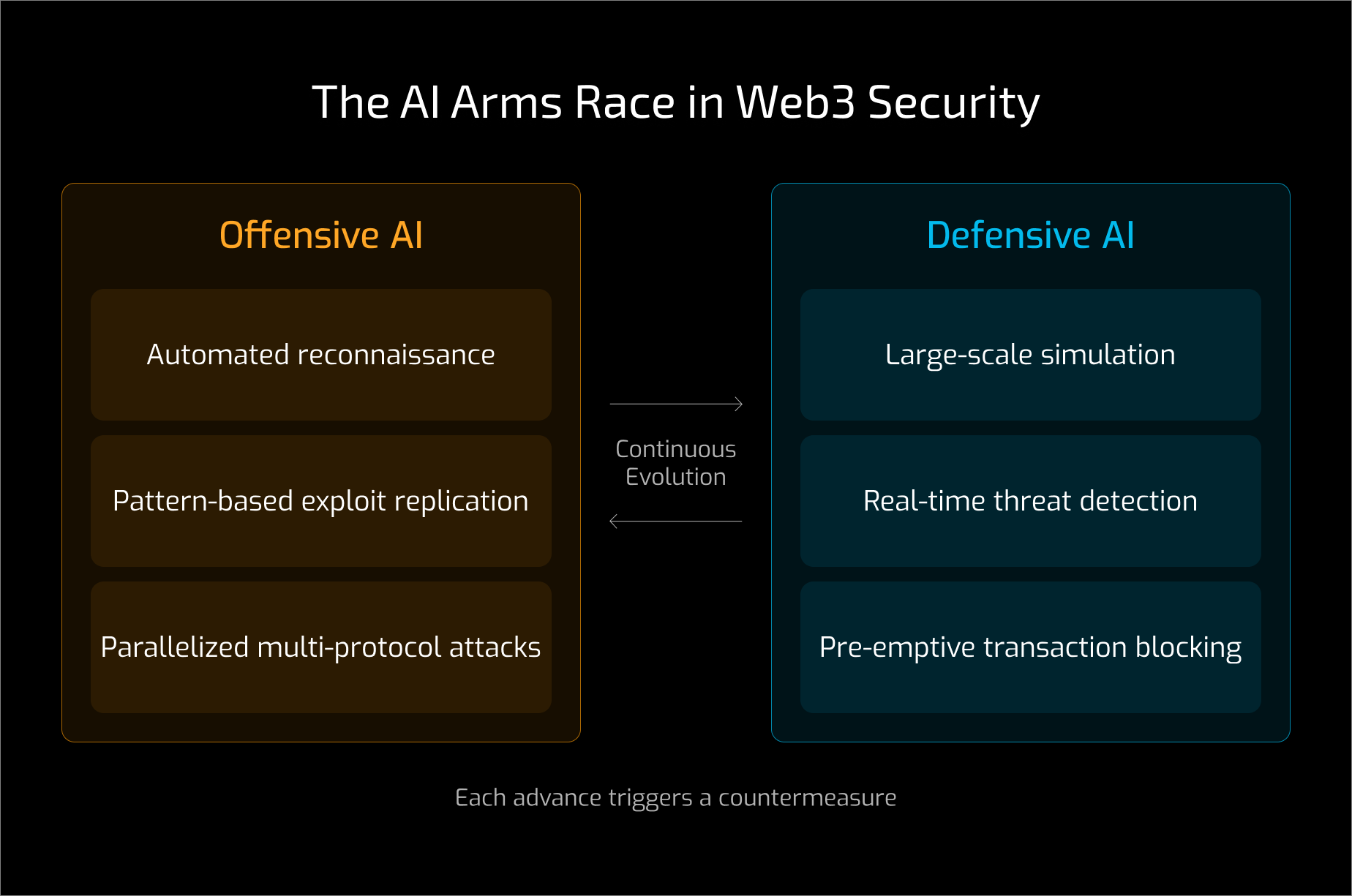

Attackers can now perform automated, large-scale reconnaissance. Instead of manually reviewing code, AI-driven scanners systematically probe thousands of smart contracts in parallel, identifying zero-day vulnerabilities across the ecosystem in a fraction of the time required by human researchers.

In an open-source, composable environment, AI introduces a contagion effect. Once a vulnerability is discovered in one protocol, AI can immediately scan for similar patterns across the ecosystem. An exploit can be replicated across dozens of protocols simultaneously, dramatically reducing the response window for defenders.
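The contagion effect rests on a simple mechanism: once one vulnerable code pattern is known, finding copies of it elsewhere is cheap. The sketch below is a deliberately crude, defender-side version in Python. It normalizes Solidity source by stripping comments and renaming identifiers, then hashes the result, so forks that only rename variables still match. The helper names and sample snippets are hypothetical. Real scanners work on ASTs, bytecode, or learned embeddings and tolerate far larger changes than this exact-match approach.

```python
from __future__ import annotations

import hashlib
import re

# Tokens kept verbatim; every other identifier is renamed positionally so that
# forks which only rename functions and variables still produce the same hash.
KEYWORDS = {
    "function", "external", "public", "internal", "returns", "return",
    "require", "if", "else", "uint256", "address", "bool", "msg", "sender",
    "call", "value",
}

def normalize(src: str) -> str:
    """Strip comments and whitespace, then rename identifiers to id0, id1, ..."""
    src = re.sub(r"/\*.*?\*/", "", src, flags=re.S)  # block comments
    src = re.sub(r"//[^\n]*", "", src)               # line comments
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", src)
    names: dict[str, str] = {}
    out = []
    for tok in tokens:
        if re.fullmatch(r"[A-Za-z_]\w*", tok) and tok not in KEYWORDS:
            names.setdefault(tok, f"id{len(names)}")
            tok = names[tok]
        out.append(tok)
    return " ".join(out)

def fingerprint(src: str) -> str:
    return hashlib.sha256(normalize(src).encode()).hexdigest()

def find_clones(known_vulnerable: str, corpus: dict[str, str]) -> list[str]:
    """Names of corpus entries structurally identical to the known-vulnerable code."""
    target = fingerprint(known_vulnerable)
    return sorted(name for name, src in corpus.items() if fingerprint(src) == target)

# Hypothetical samples: external call before the balance update (reentrancy-prone).
VULNERABLE = """
function withdraw(uint256 amount) external {
    require(balances[msg.sender] >= amount);
    (bool ok, ) = msg.sender.call{value: amount}("");
    require(ok);
    balances[msg.sender] -= amount;
}
"""

RENAMED_FORK = """
// Forked with renamed identifiers only.
function take(uint256 amt) external {
    require(credit[msg.sender] >= amt);
    (bool success, ) = msg.sender.call{value: amt}("");
    require(success);
    credit[msg.sender] -= amt;
}
"""

PATCHED = """
function withdraw(uint256 amount) external {
    require(balances[msg.sender] >= amount);
    balances[msg.sender] -= amount;
    (bool ok, ) = msg.sender.call{value: amount}("");
    require(ok);
}
"""

if __name__ == "__main__":
    corpus = {"ForkA": RENAMED_FORK, "Patched": PATCHED}
    print(find_clones(VULNERABLE, corpus))  # ['ForkA']
```

The patched version reorders the state update before the external call, so its fingerprint differs and it is not reported. The same cheapness that lets defenders sweep for clones lets attackers do it too, which is why the response window shrinks.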

Attackers are increasingly using autonomous AI coding agents to tailor their exploits. These agents generate the malicious code needed to trigger specific vulnerabilities and simulate transaction sequences to maximize the value extracted from each target.

The traditional one-off hack is giving way to parallelized exploitation. AI can coordinate multiple complex workflows simultaneously, enabling synchronized, multi-protocol attacks. Without AI support, human security teams cannot realistically respond at this scale.

Web3 security faces an immutability paradox. Blockchain’s permanence underpins trust, but it also severely limits the ability to rectify errors. In traditional systems, defenders can pause services or deploy hot fixes. In Web3, defenders often observe exploits unfolding in real time with limited options to intervene.

Once an exploit is live, immutability restricts corrective action and makes post-incident recovery extremely difficult. Three structural realities shape the role of security in Web3.

First, finality. Unlike traditional financial systems, where fraudulent transactions can be frozen or reversed, confirmed Web3 transactions are final. There is no undo mechanism.

Second, trust. Liquidity follows perceived safety. A single vulnerability does not just cause financial loss; it can permanently damage a protocol's credibility and drive capital away.

Third, composability. Web3 protocols do not operate in isolation. They function as interconnected money legos, relying on oracles, bridges, and external liquidity. A protocol is only as secure as its weakest dependency.
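The weakest-dependency idea can be modeled as a small graph problem: a protocol's effective assurance is bounded by the least-trusted component it transitively relies on. The sketch below assumes a hypothetical dependency graph and made-up per-component scores. Taking the minimum over everything reachable is a simplifying assumption; real risk models also account for correlated failures and for safeguards that contain a dependency's failure.

```python
from __future__ import annotations

# Hypothetical dependency graph: each component lists what it directly relies on.
DEPENDS_ON: dict[str, list[str]] = {
    "LendingPool": ["PriceOracle", "DEXPool", "Bridge"],
    "DEXPool": ["PriceOracle"],
    "Bridge": ["BridgeMultisig"],
    "PriceOracle": [],
    "BridgeMultisig": [],
}

# Hypothetical standalone assurance scores in [0, 1], e.g. derived from audit findings.
SCORES: dict[str, float] = {
    "LendingPool": 0.95,
    "DEXPool": 0.90,
    "PriceOracle": 0.85,
    "Bridge": 0.80,
    "BridgeMultisig": 0.60,
}

def transitive_deps(root: str, graph: dict[str, list[str]]) -> set[str]:
    """All components reachable from root (including root); safe on cycles."""
    seen: set[str] = set()
    stack = [root]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return seen

def effective_assurance(root: str, graph, scores) -> tuple[float, str]:
    """Weakest-link model: no stronger than the weakest reachable dependency."""
    weakest = min(transitive_deps(root, graph), key=lambda name: scores[name])
    return scores[weakest], weakest

if __name__ == "__main__":
    print(effective_assurance("LendingPool", DEPENDS_ON, SCORES))  # (0.6, 'BridgeMultisig')
```

In this toy graph the lending pool never calls the bridge multisig directly, yet that multisig sets its effective ceiling.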

As a result, effective audits must move beyond isolated code review and assess how a protocol behaves within a broader, adversarial system. A manipulated oracle or compromised dependency can trigger cascading failures even when the protocol's internal logic is correct.
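Oracle manipulation is a good example of a failure that correct internal logic cannot catch on its own. One common mitigation is to sanity-check a spot price against a harder-to-manipulate reference, such as a time-weighted average price (TWAP), and refuse to act when the two diverge. The sketch below is a simplified Python model of that check. The 200-basis-point tolerance, the sample history, and the function names are illustrative assumptions, and on-chain implementations use fixed-point integers rather than floats.

```python
from __future__ import annotations

def twap(samples: list[tuple[int, float]]) -> float:
    """Time-weighted average over (timestamp, price) samples sorted by time.

    Each price is weighted by how long it stayed in effect, i.e. until the next sample.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    span = samples[-1][0] - samples[0][0]
    if span <= 0:
        raise ValueError("samples must cover a positive time span")
    weighted = 0.0
    for (t0, price), (t1, _) in zip(samples, samples[1:]):
        weighted += price * (t1 - t0)
    return weighted / span

def is_plausible(spot: float, reference: float, max_deviation_bps: int = 200) -> bool:
    """Reject spot prices that deviate from the reference by more than the tolerance."""
    if spot <= 0 or reference <= 0:
        return False
    deviation_bps = abs(spot - reference) / reference * 10_000
    return deviation_bps <= max_deviation_bps

if __name__ == "__main__":
    history = [(0, 100.0), (10, 102.0), (20, 101.0)]  # hypothetical oracle samples
    reference = twap(history)                          # 101.0
    print(is_plausible(101.5, reference))  # True: about 50 bps from the TWAP
    print(is_plausible(130.0, reference))  # False: a sudden ~29% jump is rejected
```

Because a TWAP depends on prices held over time, moving it requires sustaining the manipulation across many blocks, which is much costlier than distorting a single spot price within one transaction.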

Because smart contracts are open-source and composable by design, the concept of a fixed security perimeter is becoming obsolete. The attack surface extends across every integrated protocol, oracle, and bridge.

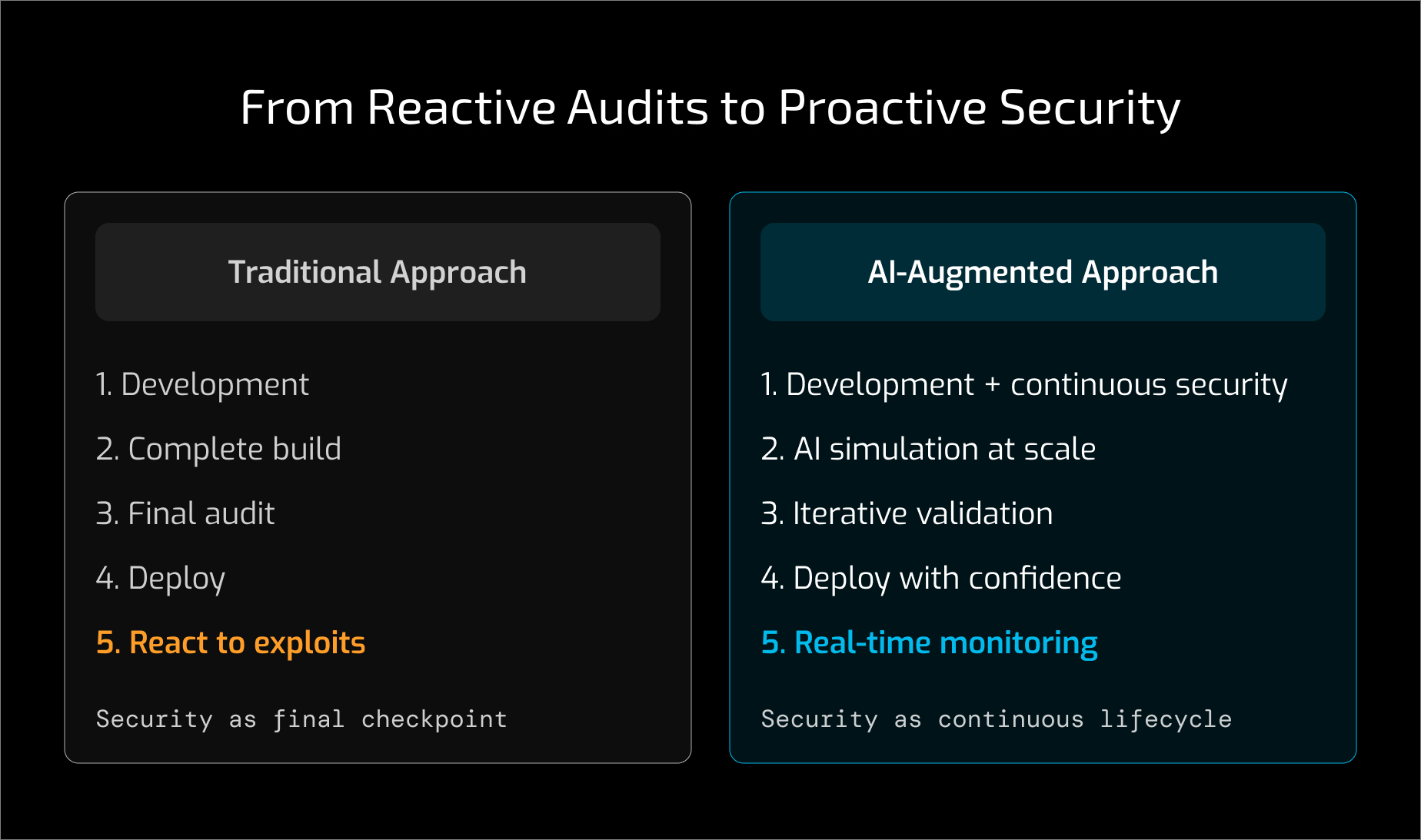

This hyper-connected environment demands a shift from reactive patching to proactive security. Since mainnet failures are often irreversible, security must be integrated upstream into the development process itself.

Security can no longer be treated as a final audit step or a checkbox before launch. As it shifts upstream, teams are engaging security reviewers earlier in development. Security must become an adversarial process that runs in parallel with development from the first line of code, which requires tighter collaboration between developers and security researchers throughout the lifecycle.

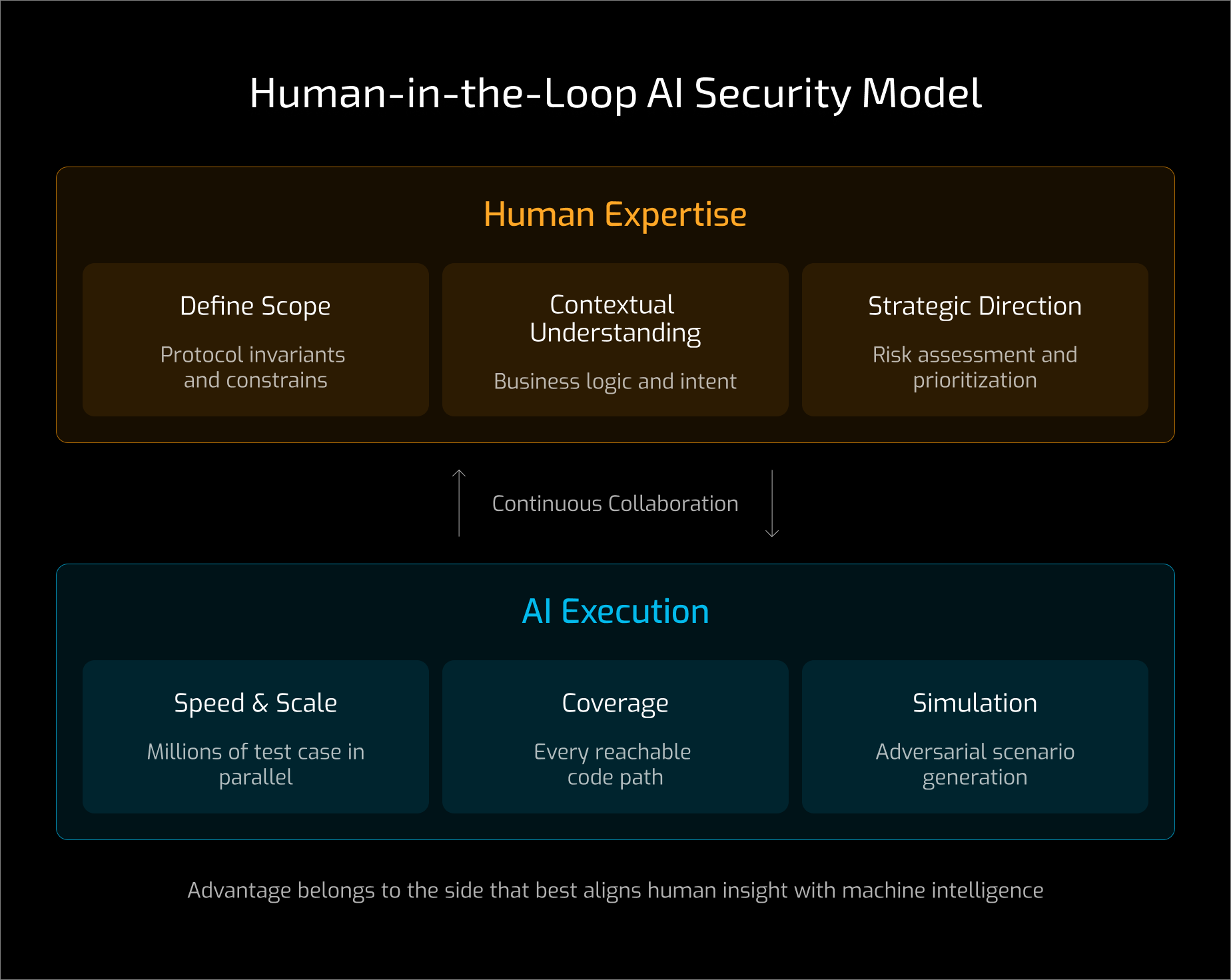

In this model, AI becomes a force multiplier. Security researchers define constraints and failure conditions, while AI executes validation at scale.

Researchers identify protocol invariants and design complex scenarios, such as flash-loan attacks or cross-protocol liquidations, to guide AI exploration. AI systems then generate millions of inputs using reinforcement learning and coverage-guided fuzzing, exploring as many reachable states and code paths as possible and checking each against those invariants.
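The division of labor described above can be sketched in a few dozen lines: the researcher states invariants as predicates over protocol state, and a fuzzer drives random call sequences against the system, checking every invariant after each call. The `ToyVault` model, its planted self-transfer bug, and the invariant names are hypothetical. This is plain random fuzzing, not the coverage-guided or reinforcement-learning-driven search described above; tools such as Foundry's invariant testing apply the same idea directly to Solidity contracts.

```python
import random
from dataclasses import dataclass, field

USERS = ["alice", "bob", "carol"]

@dataclass
class ToyVault:
    """Hypothetical share ledger with a deliberately planted self-transfer bug."""
    balances: dict = field(default_factory=lambda: {u: 0 for u in USERS})
    total_supply: int = 0

    def deposit(self, user: str, amount: int) -> None:
        self.balances[user] += amount
        self.total_supply += amount

    def withdraw(self, user: str, amount: int) -> None:
        if amount > self.balances[user]:
            return  # models a revert: state is left unchanged
        self.balances[user] -= amount
        self.total_supply -= amount

    def transfer(self, src: str, dst: str, amount: int) -> None:
        if amount > self.balances[src]:
            return
        # BUG: both balances are read before either write, so src == dst
        # credits `amount` without ever debiting it.
        src_bal, dst_bal = self.balances[src], self.balances[dst]
        self.balances[src] = src_bal - amount
        self.balances[dst] = dst_bal + amount

# Researcher-supplied invariants: predicates that must hold after every call.
INVARIANTS = {
    "supply_matches_balances": lambda v: v.total_supply == sum(v.balances.values()),
    "no_negative_balances": lambda v: all(b >= 0 for b in v.balances.values()),
}

def fuzz(runs: int = 200, depth: int = 20, seed: int = 0):
    """Random call sequences; returns (violated_invariant, trace) or None."""
    rng = random.Random(seed)
    for _ in range(runs):
        vault, trace = ToyVault(), []
        for _ in range(depth):
            op = rng.choice(["deposit", "withdraw", "transfer"])
            amount = rng.randint(0, 100)
            if op == "transfer":
                args = (rng.choice(USERS), rng.choice(USERS), amount)
            else:
                args = (rng.choice(USERS), amount)
            getattr(vault, op)(*args)
            trace.append((op, args))
            for name, holds in INVARIANTS.items():
                if not holds(vault):
                    return name, trace  # first failing sequence, not minimized
    return None

if __name__ == "__main__":
    result = fuzz()
    if result:
        name, trace = result
        print(name, "violated; last call:", trace[-1])
```

The researcher never has to guess that self-transfers are the problem; stating the supply invariant is enough for random exploration to surface the failing call.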

This is a human-in-the-loop system. Human expertise defines scope and intent, while AI delivers speed and breadth of analysis.

Deploying Web3 applications increasingly requires enterprise-grade stability and security. Security is no longer a one-time event but a continuous lifecycle.

Audits remain the primary defense against decentralized risk, but the industry is moving toward AI-augmented approaches that combine contextual understanding with large-scale simulation. Researchers first build deep context through collaboration with developers, then use AI to expand coverage.

The researcher’s role shifts from bug hunting to translating business logic into machine-readable constraints.

Researchers extract invariants that must always hold, distinguish intended behavior from griefing paths, and supply documentation, whitepapers, and past post-mortems as context so that AI evaluates the full risk surface rather than isolated code paths.

Once grounded in context, AI systems generate adversarial simulations, executable proof-of-concept exploits using tools such as Foundry or Hardhat, and targeted fuzz tests for complex logic paths. This approach transforms security research from manual exploration into large-scale simulation, enabling stress testing under conditions that cannot be replicated by hand.

Security does not end at deployment. AI-driven systems now monitor live mainnet activity, mapping account relationships to identify Sybil clusters or attacker-funded wallets before they interact with a protocol.
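A common heuristic for this kind of relationship mapping is to link wallets through their funding sources: addresses that received funds from the same origin are grouped into one cluster. The sketch below uses a union-find (disjoint-set) structure for that grouping. The funding edges, address labels, and flagging thresholds are hypothetical, and production systems combine many more signals, such as timing, shared contract interactions, and bridge hops, than this single heuristic.

```python
from __future__ import annotations

from collections import defaultdict

class UnionFind:
    """Disjoint-set forest over wallet addresses."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

def cluster_by_funding(edges, exclude_funders=frozenset()):
    """Group wallets linked by (funder, funded) edges.

    Edges from shared infrastructure such as exchange hot wallets are skipped,
    otherwise unrelated users would collapse into one giant cluster. Wallets
    that only appear on skipped edges are not tracked at all.
    """
    uf = UnionFind()
    for funder, funded in edges:
        if funder in exclude_funders:
            continue
        uf.union(funder, funded)
    clusters: dict[str, set[str]] = defaultdict(set)
    for addr in list(uf.parent):
        clusters[uf.find(addr)].add(addr)
    return list(clusters.values())

def flag_clusters(clusters, known_bad, min_size=4):
    """Flag clusters touching a known attacker address, or suspiciously large ones."""
    return [c for c in clusters if c & known_bad or len(c) >= min_size]

# Hypothetical funding history: (funder, funded wallet).
EDGES = [
    ("funderA", "wallet1"), ("funderA", "wallet2"), ("funderA", "wallet3"),
    ("wallet3", "wallet4"),
    ("exchange_hot", "user1"), ("exchange_hot", "user2"),
    ("attacker", "wallet5"),
]

if __name__ == "__main__":
    clusters = cluster_by_funding(EDGES, exclude_funders={"exchange_hot"})
    for c in flag_clusters(clusters, known_bad={"attacker"}):
        print(sorted(c))
```

Union-find keeps each merge close to constant time, which matters when the funding graph spans millions of addresses and must be updated as new blocks arrive.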

These systems move beyond alerts. They enable protocols to automatically intercept or block transactions flagged as critical before execution, shifting security from reactive analysis to preemptive control.
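The preemptive step can be pictured as a policy that runs on each pending transaction after a dry-run simulation and before the protocol lets it take effect. The sketch below combines three hypothetical signals (a sender from a flagged cluster, a call to a sensitive function, and a simulated outflow above a limit) into an allow, alert, or block verdict. The weights, thresholds, field names, and function list are illustrative assumptions, and how a block is actually enforced, for example through an on-chain guard or an automated pause, depends on the protocol.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum

class Verdict(Enum):
    ALLOW = "allow"
    ALERT = "alert"
    BLOCK = "block"

@dataclass(frozen=True)
class PendingTx:
    sender: str
    function: str           # name of the called function (hypothetical decoded form)
    simulated_outflow: int  # assets leaving the protocol in a dry-run of this tx

# Hypothetical set of privileged or high-impact functions.
SENSITIVE_FUNCTIONS = {"setOracle", "upgradeTo", "emergencyWithdraw"}

def screen(tx: PendingTx, flagged_senders: set[str], max_outflow: int) -> Verdict:
    """Combine simple risk signals into a verdict; weights and cut-offs are illustrative."""
    score = 0
    if tx.sender in flagged_senders:        # e.g. a member of a flagged funding cluster
        score += 2
    if tx.function in SENSITIVE_FUNCTIONS:
        score += 1
    if tx.simulated_outflow > max_outflow:  # dry-run shows an abnormal drain
        score += 2
    if score >= 4:
        return Verdict.BLOCK
    if score >= 2:
        return Verdict.ALERT
    return Verdict.ALLOW

if __name__ == "__main__":
    tx = PendingTx(sender="wallet5", function="withdraw", simulated_outflow=5_000_000)
    print(screen(tx, flagged_senders={"wallet5"}, max_outflow=1_000_000))  # Verdict.BLOCK
```

Requiring two independent signals before blocking is one way to keep false positives from halting legitimate users while still stopping transactions that look both suspicious in origin and damaging in effect.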

Offensive and defensive AI evolve in tandem. Attackers probe defenses, and defenders retrain models in response. Each advance triggers a countermeasure.

This feedback loop increasingly plays out in simulated environments where AI agents train against one another across multiple iterations. As attack capabilities improve, defensive systems evolve to evaluate risk earlier in development and deployment.

The contest between offensive and defensive AI in Web3 security is already underway. AI agents can autonomously discover and exploit smart contract vulnerabilities, and the same technology is being used to detect and prevent them.

The advantage will belong to the side that best aligns human insight with machine intelligence.

The future of Web3 security depends not only on how capable our systems are, but on how deliberately they are built. In practice, this means deeper collaboration between developers and security researchers, with AI embedded throughout the development lifecycle.

In the end, better AI will win.

The open question is who will wield it more effectively.